Goal:

To create a customizable neural network library that can run on small embedded systems. I plan to integrate this library into my upcoming embedded projects.

Previous neural networks:

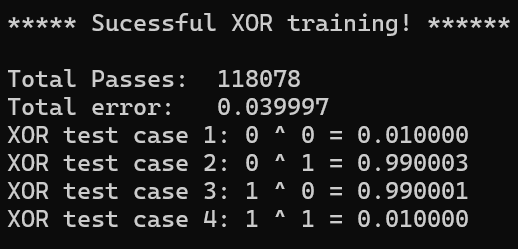

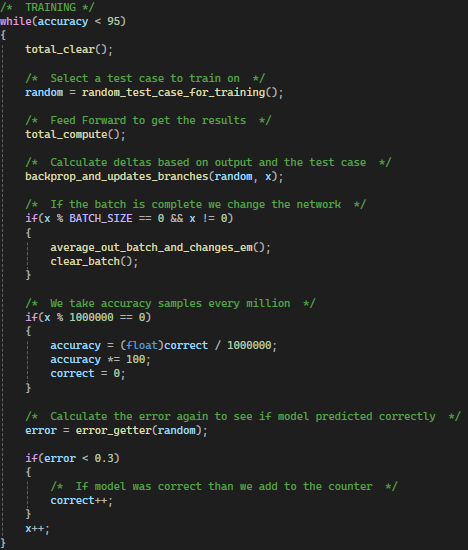

Before starting this project, I created two smaller neural networks to gain a better understanding of how they work. The first was designed to solve the XOR problem, a classic challenge in AI. This project helped me understand how neural networks learn patterns and how the training process works. The second network I built was trained to recognize numbers even when parts of them were missing. This taught me how neural networks can “infer” or generalize from incomplete data, based on patterns they’ve seen before. Both projects were essential in preparing me to design a more flexible neural network library from scratch.

Process:

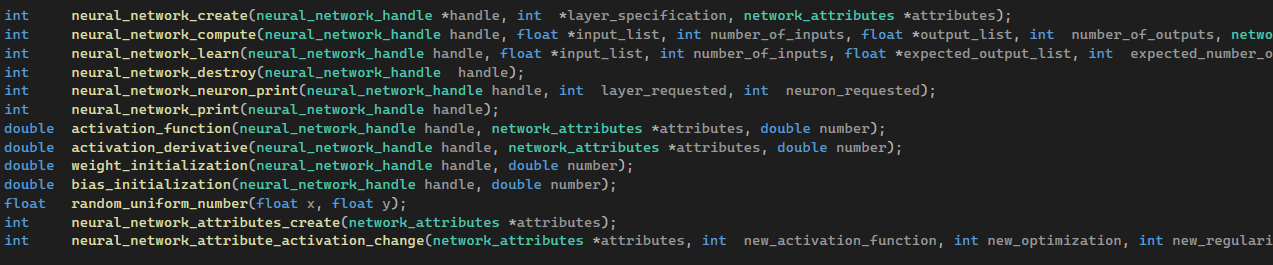

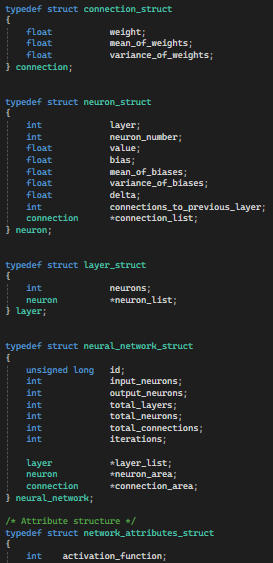

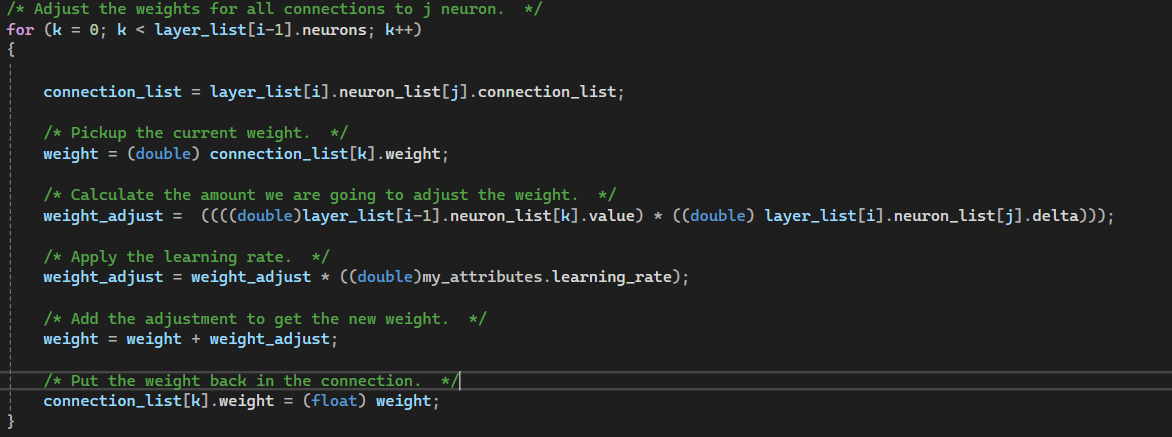

First, I created data structures for each part of the neural network, including layers, neurons, attributes, and connections. Then I developed an API that takes a handle to a neural network, layer specifications, and other attributes, initializing the network accordingly. Using the logic learned from my previous neural networks, I implemented the core learning and computing APIs, which accept inputs and generate outputs. After establishing this foundation, I added more API features to support customizable activation functions and optimizations. Throughout this process, I also received valuable guidance from an expert on designing flexible APIs, structuring the system effectively, and handling memory allocation, which helped me refine my approach and build a more adaptable library.
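As a rough illustration of the structure described above, here is a minimal sketch in C of per-layer data structures, an initialization call that takes a network handle plus layer specifications, and a forward pass. It is not the library's actual code: every name (`nn_layer`, `nn_net`, `nn_init`, `nn_forward`, `nn_free`, `nn_relu`) is hypothetical, the weights start at zero instead of being trained or randomized, and ReLU is assumed as the default activation only to keep the example short.

```c
#include <assert.h>
#include <stddef.h>
#include <stdlib.h>

/* All names in this sketch are hypothetical and chosen for illustration. */
typedef float (*nn_activation)(float);

typedef struct {
    size_t in_count;    /* values feeding this layer */
    size_t out_count;   /* neurons in this layer */
    float *weights;     /* out_count x in_count, row-major */
    float *biases;      /* one per neuron */
    float *outputs;     /* one per neuron, written by nn_forward */
    nn_activation act;  /* activation, swappable per layer */
} nn_layer;

typedef struct {
    size_t layer_count;
    nn_layer *layers;
} nn_net;

static float nn_relu(float x) { return x > 0.0f ? x : 0.0f; }

/* Releases everything nn_init allocated; safe on a partially built net. */
void nn_free(nn_net *net)
{
    if (!net || !net->layers) return;
    for (size_t i = 0; i < net->layer_count; i++) {
        free(net->layers[i].weights);
        free(net->layers[i].biases);
        free(net->layers[i].outputs);
    }
    free(net->layers);
    net->layers = NULL;
    net->layer_count = 0;
}

/* sizes[0] is the input width; sizes[1..count-1] are the layer widths.
   Passing act == NULL selects ReLU. Returns 0 on success, -1 on failure. */
int nn_init(nn_net *net, const size_t *sizes, size_t count, nn_activation act)
{
    assert(net && sizes && count >= 2);
    net->layer_count = count - 1;
    net->layers = calloc(net->layer_count, sizeof *net->layers);
    if (!net->layers) { net->layer_count = 0; return -1; }
    for (size_t i = 0; i < net->layer_count; i++) {
        nn_layer *l = &net->layers[i];
        l->in_count = sizes[i];
        l->out_count = sizes[i + 1];
        l->act = act ? act : nn_relu;
        l->weights = calloc(l->in_count * l->out_count, sizeof *l->weights);
        l->biases = calloc(l->out_count, sizeof *l->biases);
        l->outputs = calloc(l->out_count, sizeof *l->outputs);
        if (!l->weights || !l->biases || !l->outputs) {
            nn_free(net);
            return -1;
        }
    }
    return 0;
}

/* Feeds input through every layer; returns the last layer's outputs. */
const float *nn_forward(nn_net *net, const float *input)
{
    const float *in = input;
    for (size_t i = 0; i < net->layer_count; i++) {
        nn_layer *l = &net->layers[i];
        for (size_t n = 0; n < l->out_count; n++) {
            float sum = l->biases[n];
            for (size_t k = 0; k < l->in_count; k++)
                sum += l->weights[n * l->in_count + k] * in[k];
            l->outputs[n] = l->act(sum);
        }
        in = l->outputs;  /* this layer's outputs feed the next layer */
    }
    return in;
}
```

In this sketch, a caller would describe a network with an array such as `{2, 2, 1}`, pass it to `nn_init`, fill in or train the weights, call `nn_forward` for each input, and release the memory with `nn_free`. Storing the activation as a function pointer on each layer is one simple way to make activation functions customizable.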

Challenges:

The biggest challenge I faced was making the neural network flexible enough to handle a wide range of problems. My previous neural networks were designed for specific tasks with fixed architectures, so I couldn’t easily change their structure. Although I could reuse the core logic from those projects, converting it into a system that supports any number of neurons and layers was difficult. Additionally, this was the first time I worked with large, complex data structures in my code, which made understanding and managing the project more challenging at first. I received guidance from an expert on strategies for structuring the code and managing memory, which allowed me to address these issues and build a more adaptable system.
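One way to support any number of layers and neurons while keeping memory use predictable on a small device is to compute the total storage from the layer specification and carve it out of a single caller-supplied buffer. The sketch below assumes that approach for illustration; it is not necessarily how this library manages memory, and the names `nn_layer_view`, `nn_floats_needed`, and `nn_carve` are hypothetical.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical view of one layer's storage inside a shared buffer. */
typedef struct {
    size_t in_count;
    size_t out_count;
    float *weights;   /* out_count x in_count */
    float *biases;    /* out_count */
    float *outputs;   /* out_count */
} nn_layer_view;

/* Number of floats needed for the weights, biases and outputs of every
   layer. sizes[0] is the input width; sizes[1..count-1] are layer widths. */
size_t nn_floats_needed(const size_t *sizes, size_t count)
{
    assert(sizes && count >= 2);
    size_t total = 0;
    for (size_t i = 1; i < count; i++)
        total += sizes[i - 1] * sizes[i] + 2 * sizes[i];
    return total;
}

/* Splits buf into per-layer regions without calling malloc.
   views must have room for count - 1 entries.
   Returns 0 on success, -1 if buf is too small. */
int nn_carve(float *buf, size_t buf_len,
             const size_t *sizes, size_t count, nn_layer_view *views)
{
    assert(buf && views);
    if (nn_floats_needed(sizes, count) > buf_len)
        return -1;
    float *p = buf;
    for (size_t i = 1; i < count; i++) {
        nn_layer_view *v = &views[i - 1];
        v->in_count = sizes[i - 1];
        v->out_count = sizes[i];
        v->weights = p;  p += v->in_count * v->out_count;
        v->biases = p;   p += v->out_count;
        v->outputs = p;  p += v->out_count;
    }
    return 0;
}
```

With this layout the buffer can be a static array sized at build time, so the network's shape changes by editing the `sizes` array instead of the code, and an undersized buffer is reported as an error before anything is written to it.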

Tools & Technologies:

C, Visual Studio, Neural Networks, Memory Allocation

Outcome:

The network can handle various types of problems and is designed to run on small embedded systems. I plan to use it in the near future when I integrate neural networks into some of my hardware projects.

Reflection:

By not relying on any external AI libraries, I gained a deep, hands-on understanding of how neural networks work at a low level. I also learned how to write code that isn't limited to a single use case by making it flexible and adaptable through the use of data structures and APIs.